
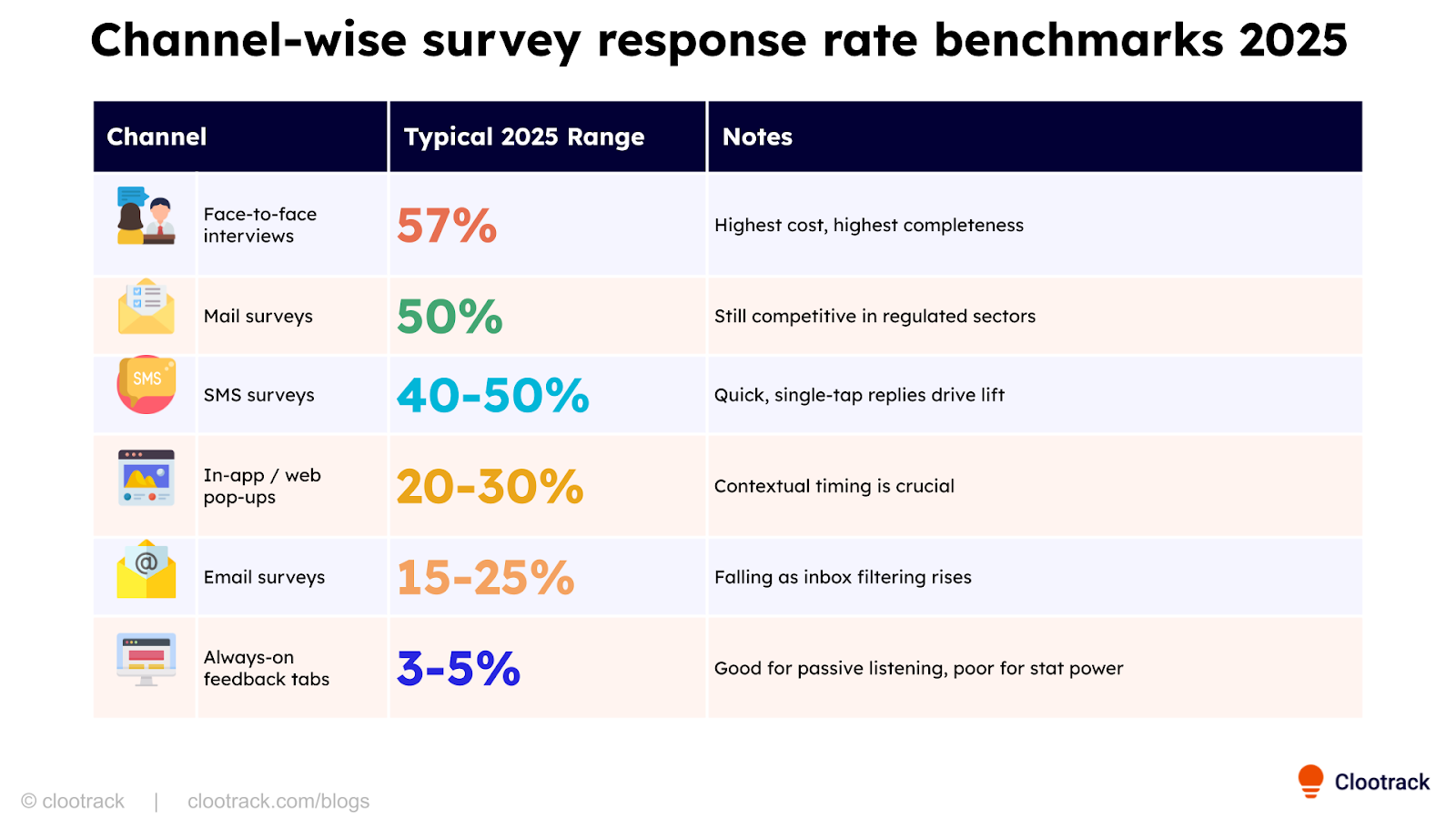

There is no universal “industry standard” because survey response rates vary widely by channel, sector, and audience type. For example, digital customer surveys via email and web usually average 20–30%, while SMS surveys often achieve 40–50%, and employee engagement surveys hover closer to 30–40%.

This variation means you can’t just Google one number and assume it applies. Instead, you need reports that break results down by channel, industry, and audience type.

To get data you can act on, CX teams should look at a mix of the following:

Platforms like SurveyMonkey, Qualtrics, Zendesk, and SurveySparrow regularly publish benchmark reports. These cover both channel-specific ranges and industry norms.


Sector-focused organizations often run their own surveys and publish aggregated response data for their industry.

These reports are critical because they allow you to grade yourself against a relevant baseline rather than an abstract average.

Market research groups like Forrester and Gartner publish Voice of Customer (VoC) insights in which response rate trends are embedded within broader CX strategy reports. They also highlight structural shifts in response behavior over time.

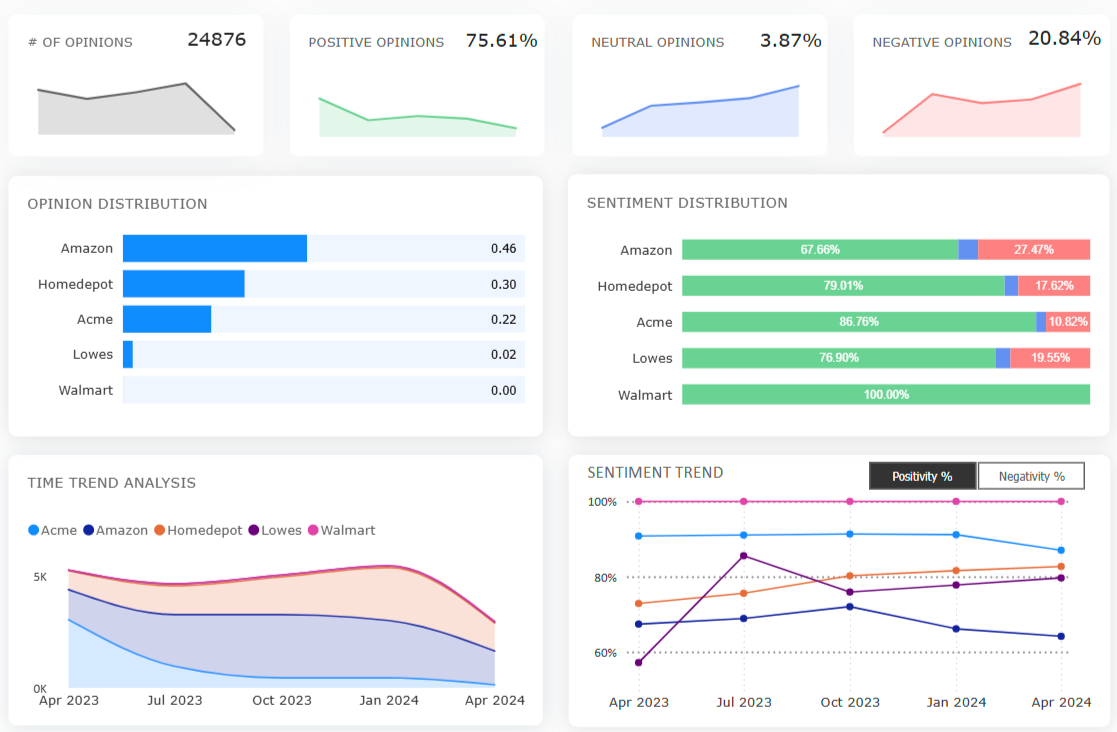

Beyond external benchmarks, the most actionable reports are generated by VoC and survey intelligence platforms that consolidate multiple feedback channels into a single dashboard.

For instance, the Clootrack AI feedback analytics tool aggregates feedback from multiple channels into a single view.

By blending these sources, CX teams get unified survey response rate reporting.

This centralization ensures leaders don’t just monitor raw percentages but tie survey participation directly to business impact.
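Blending channels into one figure raises a calculation question: a unified rate should pool completions and valid invitations across channels rather than average the per-channel percentages, because a simple mean lets a small channel carry as much weight as a large one. The sketch below illustrates this with hypothetical counts and a hypothetical `ChannelStats` record; it is not a Clootrack API or the tool's actual method.

```python
from dataclasses import dataclass


@dataclass
class ChannelStats:
    """Hypothetical per-channel counts for one collection period."""
    channel: str
    completed: int
    valid_invites: int  # invitations sent minus bounced/undelivered ones


def pooled_rate(stats: list[ChannelStats]) -> float:
    """Overall response rate (%), weighted by each channel's valid invitations."""
    total_invites = sum(s.valid_invites for s in stats)
    if total_invites == 0:
        raise ValueError("no valid invitations")
    return 100 * sum(s.completed for s in stats) / total_invites


def mean_of_rates(stats: list[ChannelStats]) -> float:
    """Unweighted average of per-channel rates, shown only for contrast."""
    return sum(100 * s.completed / s.valid_invites for s in stats) / len(stats)


channels = [
    ChannelStats("email", completed=1_500, valid_invites=10_000),  # 15%
    ChannelStats("sms", completed=200, valid_invites=500),         # 40%
]
print(f"pooled: {pooled_rate(channels):.1f}%")           # pooled: 16.2%
print(f"mean of rates: {mean_of_rates(channels):.1f}%")  # mean of rates: 27.5%
```

Here the small SMS channel would inflate a naive average by more than 11 points, which is why a unified report should state how it combines channels.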

Simply knowing the average isn’t enough. Once you locate a report, you still have to apply it to your own situation.


You can find CX survey response rate reports in many places: survey platform benchmarks, industry-specific studies, analyst research, and unified VoC platforms. But their true value lies in contextual interpretation.

Instead of chasing a “universal standard,” anchor on benchmarks that match your industry, channel, and audience type.

When survey data is centralized and contextualized, it stops being a vanity metric and becomes a true decision signal for CX strategy.

1) What is a CX survey response rate report?

A summary of completion rates by industry, channel (email/SMS/in-app), and audience type. Good reports also define the denominator (valid invites), sample sizes, and collection period so benchmarks are comparable.
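Under the hood, such a report is a grouped aggregation. The sketch below builds one from hypothetical per-invitation records, assuming each record carries `industry`, `channel`, `audience`, a `delivered` flag, and a `completed` flag; these field names are illustrative, not a standard schema. It keeps the denominator (valid invites) next to each rate so segments stay comparable.

```python
from collections import defaultdict
from typing import NamedTuple


class Invite(NamedTuple):
    """One survey invitation (hypothetical schema)."""
    industry: str
    channel: str   # e.g. "email", "sms", "in-app"
    audience: str  # e.g. "B2B", "B2C"
    delivered: bool
    completed: bool


def summarize(invites: list[Invite]) -> dict[tuple[str, str, str], dict[str, float]]:
    """Completion rate per (industry, channel, audience), with its denominator."""
    counts = defaultdict(lambda: {"valid_invites": 0, "completed": 0})
    for inv in invites:
        if not inv.delivered:
            continue  # bounced/undelivered invites stay out of the denominator
        seg = counts[(inv.industry, inv.channel, inv.audience)]
        seg["valid_invites"] += 1
        seg["completed"] += int(inv.completed)
    return {
        key: {**c, "rate_pct": 100 * c["completed"] / c["valid_invites"]}
        for key, c in counts.items()
    }


invites = [
    Invite("retail", "email", "B2C", delivered=True, completed=True),
    Invite("retail", "email", "B2C", delivered=True, completed=False),
    Invite("retail", "email", "B2C", delivered=False, completed=False),  # bounced
    Invite("retail", "sms", "B2C", delivered=True, completed=True),
]
for segment, row in summarize(invites).items():
    print(segment, row)
```

A published report would also record the collection period; in this sketch you would run `summarize` once per period.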

2) What is a good survey response rate?

“Good” is context-dependent, but 10–30% is typical for customer surveys; 30%+ is strong, and 50%+ is either exceptional or a sign of a compliance-driven context. Validate against your channel and audience specifics before setting targets.
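As a rough illustration, the bands in this answer can be encoded as a simple lookup. The cut-offs come straight from the text above; the label strings and the `rate_band` name are illustrative, and the result is a starting point to check against channel and audience benchmarks, not a target in itself.

```python
def rate_band(rate_pct: float) -> str:
    """Map a customer-survey response rate (%) to this article's rough bands."""
    if not 0 <= rate_pct <= 100:
        raise ValueError("rate must be a percentage between 0 and 100")
    if rate_pct >= 50:
        return "exceptional (or compliance-driven)"
    if rate_pct >= 30:
        return "strong"
    if rate_pct >= 10:
        return "typical"
    return "below typical range"


for r in (8, 22, 35, 55):
    print(r, rate_band(r))
```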

3) How do you calculate customer survey response rate?

Response rate = (completed surveys ÷ valid invitations sent) × 100. Exclude bounces/undelivered to avoid understating performance; report channel, period, and sample size for apples-to-apples comparisons.
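The formula translates directly into code. This minimal sketch (the function name and arguments are illustrative) subtracts bounces from invitations sent before dividing, and shows how leaving bounces in the denominator understates the rate.

```python
def response_rate(completed: int, sent: int, bounced: int = 0) -> float:
    """Response rate (%) = completed / valid invitations x 100.

    Valid invitations are invitations sent minus bounced/undelivered ones.
    """
    valid = sent - bounced
    if valid <= 0:
        raise ValueError("need at least one delivered invitation")
    if not 0 <= completed <= valid:
        raise ValueError("completed must be between 0 and the valid invitations")
    return 100 * completed / valid


# 1,000 invites sent, 50 bounced, 190 completed surveys
print(f"{response_rate(190, 1_000, bounced=50):.1f}%")  # 20.0%
# Same data with bounces wrongly kept in the denominator
print(f"{response_rate(190, 1_000):.1f}%")  # 19.0%
```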

4) How can I quickly improve survey response rates?

Go mobile-first, combine channels (email plus timely SMS nudges), keep surveys short (≤7 minutes) with skip/branch logic, and personalize invitations. These tactics typically lift both survey starts and completions.

5) How should I benchmark my survey response rate?

Match like-for-like: industry, audience type (B2B/B2C), geography, and channel. Track your own trend by channel over time, then compare against two reputable external sources.
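The two steps in this answer, tracking your own trend per channel and then checking it against external ranges, can be sketched as follows. The monthly rates and the benchmark ranges are placeholders, and `source_a`/`source_b` stand in for whichever two reports match your industry, audience type, geography, and channel. The trend is a plain least-squares slope, one reasonable choice among several.

```python
from statistics import mean


def slope(values: list[float]) -> float:
    """Least-squares slope of a rate series (percentage points per period)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two periods")
    x_bar, y_bar = (n - 1) / 2, mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def compare(latest: float, ranges: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Position the latest rate against each external benchmark range."""
    out = {}
    for source, (low, high) in ranges.items():
        if latest < low:
            out[source] = "below"
        elif latest > high:
            out[source] = "above"
        else:
            out[source] = "within"
    return out


# Hypothetical monthly email response rates (%) for one like-for-like segment
email_trend = [18.0, 19.5, 21.0, 22.5]
# Placeholder ranges: replace with figures from two matching external reports
email_benchmarks = {"source_a": (20.0, 30.0), "source_b": (15.0, 22.0)}

print(f"trend: {slope(email_trend):+.2f} pts/period")
print(compare(email_trend[-1], email_benchmarks))
```

If the two sources disagree, as they do in this placeholder data, that is itself a signal to recheck whether both really match your segment.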
