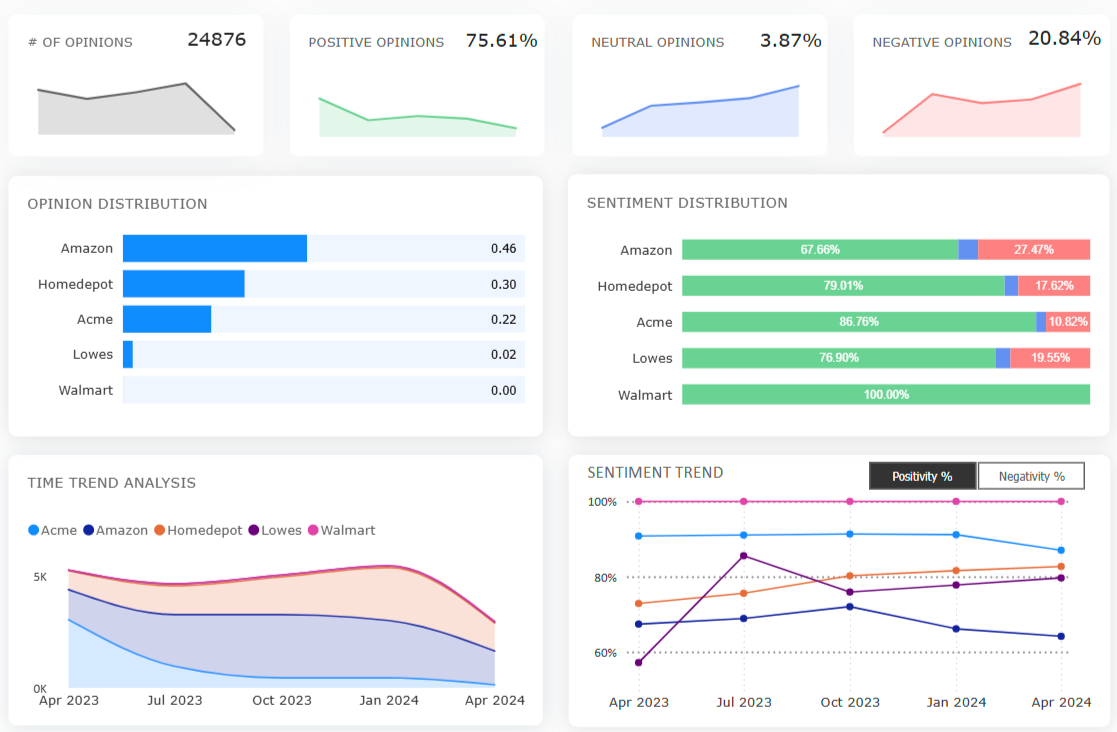

The question “What is an average survey response rate?” is deceptively simple. In 2025, the typical survey response rate for external digital questionnaires lands between 20% and 30%, but that headline figure hides channel, industry, and design effects big enough to skew any Voice-of-Customer (VoC) dashboard.

This article clarifies the definition, the latest survey response rate benchmarks, and the practical moves that separate mere compliance from decision-ready customer feedback analysis.

Survey response rate = (completed surveys ÷ invitations sent) × 100.

In words: response rate = (number of people who completed the survey ÷ total number of people you sent it to) × 100.

A 24 % rate means 24 of every 100 invitees finished the survey. Count only completed responses, not views or partials, to avoid inflating the numerator and overstating the rate.
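The formula above can be sketched in a few lines of Python. The function name `response_rate` and its parameters are illustrative, not part of any survey tool's API, and the sketch assumes you already have clean counts of invitations and fully completed surveys.

```python
def response_rate(completed: int, invited: int) -> float:
    """Return the survey response rate as a percentage.

    completed: fully completed surveys (views and partials excluded)
    invited:   invitations sent
    """
    if invited <= 0:
        raise ValueError("invited must be a positive number")
    if not 0 <= completed <= invited:
        raise ValueError("completed must be between 0 and invited")
    return completed / invited * 100


# 24 completed surveys out of 100 invitations
print(f"{response_rate(completed=24, invited=100):.1f}%")  # 24.0%
```

The validation step is a design choice: rejecting a completed count larger than the invitation count catches the common mistake of feeding in views or partial responses.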

There is no magic-number certification; “good” equals fit-for-purpose. Still, several 2025 studies converge on a broad yardstick: 20–30 % is respectable for most external online surveys.

SurveyLab’s June 2025 review pegs 20–25% as the “acceptable” range for email-based customer surveys, and 10–30% for internal employee polls.

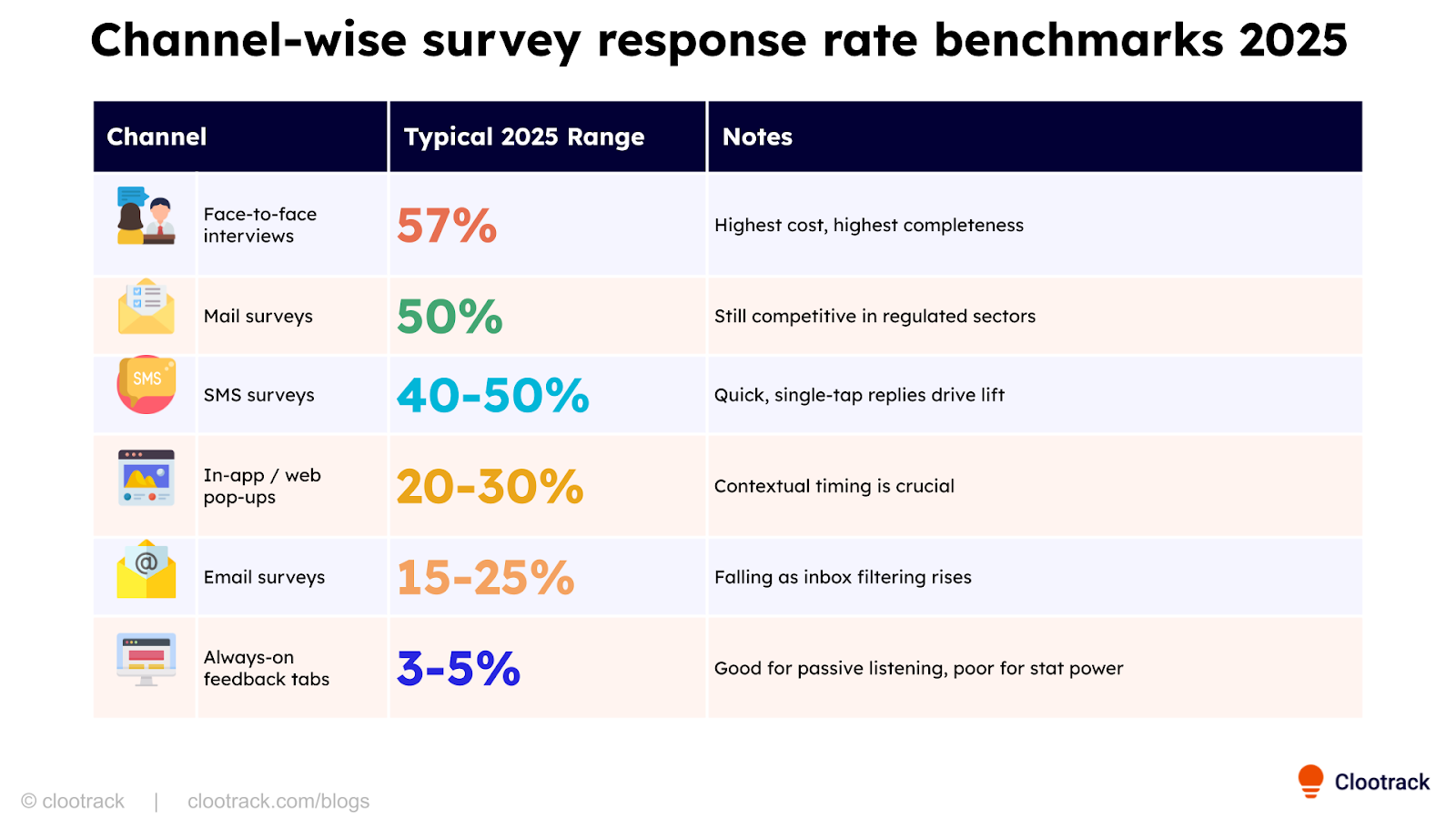

When you move outside email, norms shift fast. SurveySparrow’s July 2025 benchmark study shows channel medians swinging from single digits to almost 50% – a reminder to grade yourself against the right peer set, not a generic average.

↠ What is a good response rate for a survey?

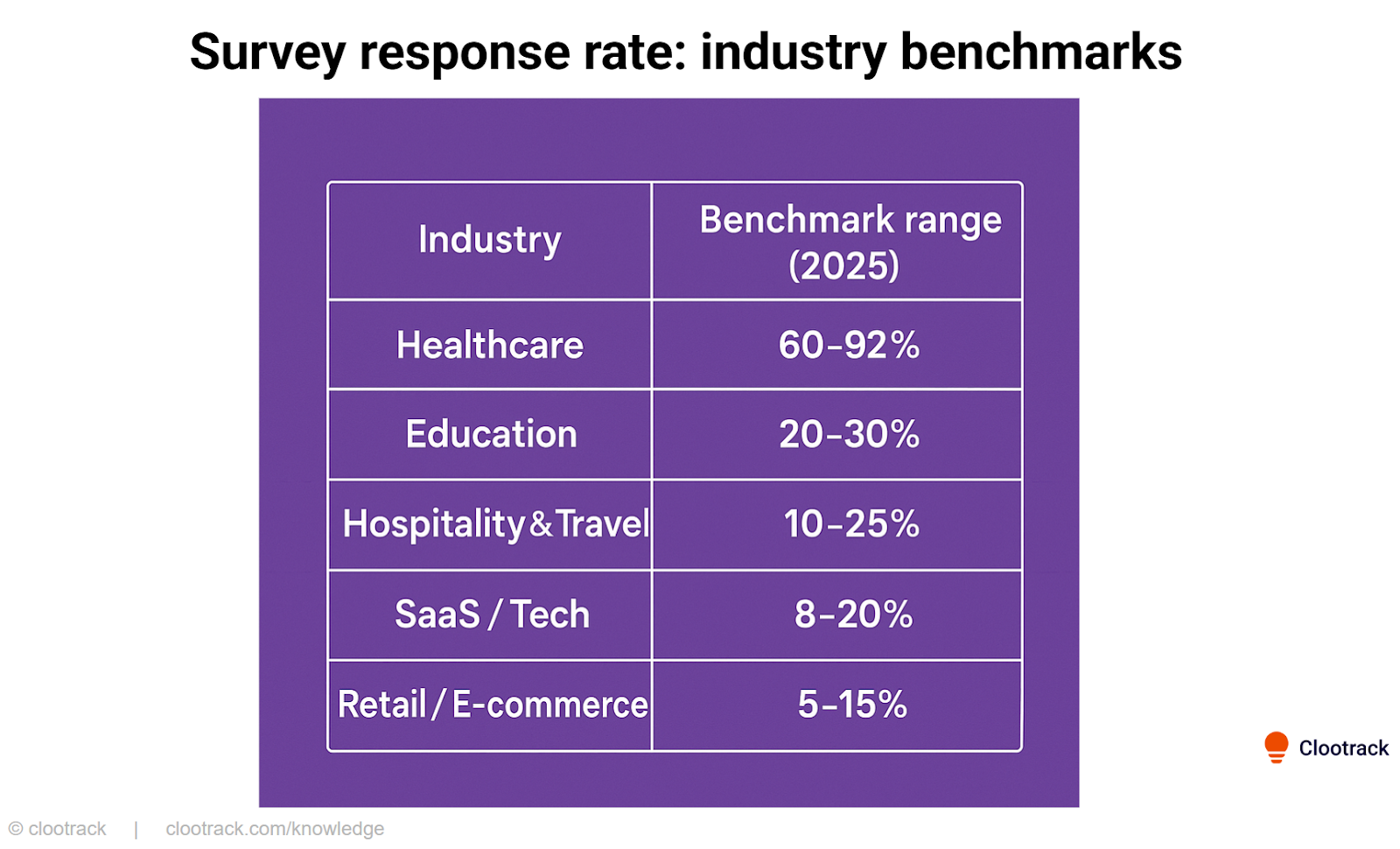

Take-away: a 22 % response rate would be top-quartile for retail but mediocre for healthcare. Benchmark context matters more than the absolute figure.

A higher rate does not automatically mean better data. What matters is whether the respondents mirror the total audience on the variables that influence the answers: tenure, spend, sentiment, and geography.

A 15% rate that is demographically balanced can outperform a 35% rate dominated by vocal promoters. Treat response rate as a quality signal to audit representativeness, not as a vanity KPI.
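One rough way to audit representativeness is to compare each segment's share of respondents with its share of the invited audience. The sketch below does that for a single variable. The function name, the tenure segments, and all counts are hypothetical and only illustrate the idea; a real audit would repeat the comparison for spend, sentiment, and geography.

```python
def representation_gaps(
    population: dict[str, int], respondents: dict[str, int]
) -> dict[str, float]:
    """Gap, in percentage points, between each segment's share of
    respondents and its share of the invited audience.

    Positive = over-represented among respondents, negative = under-represented.
    """
    pop_total = sum(population.values())
    resp_total = sum(respondents.values())
    if pop_total <= 0 or resp_total <= 0:
        raise ValueError("both groups need at least one member")
    return {
        segment: round(
            (respondents.get(segment, 0) / resp_total - count / pop_total) * 100, 1
        )
        for segment, count in population.items()
    }


# Hypothetical counts by customer tenure, for illustration only.
invited = {"under_1_year": 4000, "1_to_3_years": 3500, "over_3_years": 2500}
completed = {"under_1_year": 300, "1_to_3_years": 500, "over_3_years": 700}

for segment, gap in representation_gaps(invited, completed).items():
    print(f"{segment}: {gap:+.1f} pts")
```

In this made-up example, long-tenure customers are heavily over-represented, so the overall score would lean toward their views regardless of the headline response rate.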

The average survey response rate in 2025 is around 20–30 %, but the only meaningful survey response rate benchmark is the one tailored to your channel, industry, and audience realities. Treat response rate as an early-warning system: an alert that your listening mechanism no longer matches how customers want to talk. When it sounds, adjust channels, design, and incentives accordingly.

Adapt your VoC analytics programme to meet customers where they are, and higher response rates will follow.

For external, email-based CSAT or NPS surveys, 20–30 % is the current “respectable” band in 2025. Anything above 30 % is top-quartile for most B2C sectors; in B2B SaaS, 22 % already puts you ahead of roughly three-quarters of peers. Context, however, is everything: an SMS CSAT pulse is judged against a 40–50% norm, while an always-on feedback tab is healthy at 3–5%.

Statistical validity hinges on sample size, population variance, and confidence level, not on response-rate percentage alone. For large customer bases, about 400 completed responses deliver roughly a ±5% margin of error at 95% confidence, whether that comes from 4% of 10,000 invitees or 40% of 1,000. Smaller populations need a larger share to take part: with a few thousand customers, roughly 10–15 % participation is needed to hit the same confidence band, and the required share rises further as the population shrinks.
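The arithmetic behind these figures can be sketched with the standard margin-of-error formula for a proportion, plus the finite population correction for smaller audiences. This is a minimal sketch under textbook assumptions (simple random sampling, the most conservative proportion p = 0.5, z = 1.96 for 95% confidence). The function names are illustrative, and the article does not state which formula its figures are based on.

```python
import math
from typing import Optional

Z_95 = 1.96  # z-score for a 95% confidence level


def margin_of_error(
    n: int, population: Optional[int] = None, p: float = 0.5, z: float = Z_95
) -> float:
    """Margin of error (as a proportion) for n completed responses.

    If population is given, the finite population correction is applied.
    """
    moe = z * math.sqrt(p * (1 - p) / n)
    if population is not None and population > 1:
        moe *= math.sqrt((population - n) / (population - 1))
    return moe


def required_responses(
    population: int, moe: float = 0.05, p: float = 0.5, z: float = Z_95
) -> int:
    """Completed responses needed to reach the target margin of error."""
    n0 = z**2 * p * (1 - p) / moe**2  # sample size for a very large population
    return math.ceil(n0 / (1 + (n0 - 1) / population))


print(f"{margin_of_error(400):.1%}")  # 4.9%
print(required_responses(10_000))     # 370
```

Dividing `required_responses(population)` by the population gives the participation share needed for a given audience size, which is a more useful target than a generic response-rate benchmark.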

A smaller, well-balanced sample beats a larger, skewed one every time.

Response rates have slipped by roughly 1–2 percentage points per year since 2019 as inbox overload, mobile friction, and privacy concerns intensified. Email completions that averaged 28% pre-pandemic now hover near 22%. One bright spot: mobile-native channels (SMS, in-app cards) have gained 5–10 points thanks to shorter formats and tap-to-answer UX.

The top seven factors that influence your survey response rate are:

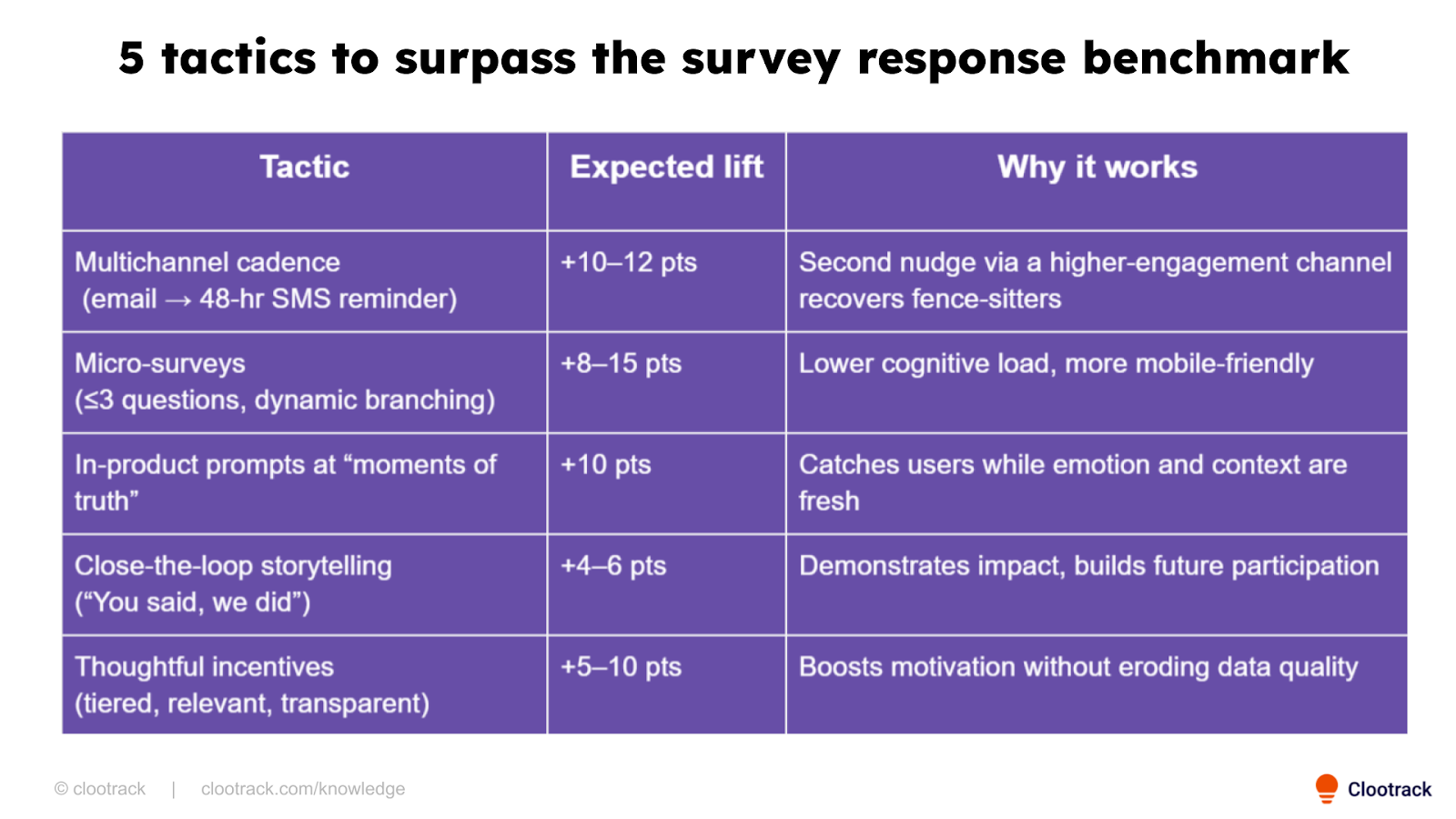

Follow these steps and you’ll usually climb a tier above the typical benchmark while still getting honest, helpful feedback.
