The low survey response rate crisis: 2025 guide for CX & insights leaders

Customer surveys were once the gold standard for feedback. But in 2025, low survey response rates have put entire CX strategies at risk.

Whether it’s a post-purchase form, an NPS request, or a product satisfaction survey, today’s customers are less likely to engage. What was once a steady flow of insights is now a fragmented, low-volume stream of customer feedback that fails to capture the full experience.

This isn’t just a drop in participation; it’s a crisis of visibility in feedback. With fewer responses, CX and insights teams face growing gaps in understanding what drives customer sentiment, loyalty, and churn. It becomes harder to spot emerging issues, validate decisions, or drive continuous experience improvement.

For leaders focused on customer retention, product development, and brand trust, solving the low survey response rate problem is no longer optional. It’s essential to protect insight quality, stay customer-informed, and remain competitive in an increasingly real-time world.

1. Understanding survey response rates

Customer surveys are still one of the most commonly used tools in CX programs, but fewer responses mean less reliability. Before teams can fix the problem, they need clarity on what qualifies as a low response rate and how it affects the accuracy of actionable CX insights and analytics.

1.1 What is considered a low survey response rate?

A survey response rate below 10% is widely considered low in enterprise programs. In many industries, response rates for email surveys dip below 5%, while even well-designed campaigns rarely exceed 30% without personalization or incentives. SMS and in-app surveys typically perform better but still face challenges with scale and consistency.
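To make these thresholds concrete, here is a minimal Python sketch that computes a response rate and labels it using the cut-offs this guide cites (below 5% is a critical red flag, below 10% is low). The function names, the tier labels, and the choice of delivered invitations as the denominator are illustrative assumptions, not an industry standard. Adjust the thresholds to your own sector benchmarks.

```python
def response_rate(responses: int, invitations: int) -> float:
    """Return the response rate as a percentage of delivered invitations (assumed denominator)."""
    if invitations <= 0:
        raise ValueError("invitations must be positive")
    if not 0 <= responses <= invitations:
        raise ValueError("responses must be between 0 and invitations")
    return 100.0 * responses / invitations


def classify_rate(rate_pct: float) -> str:
    """Label a response rate using the thresholds cited in this guide (illustrative tiers)."""
    if rate_pct < 5:
        return "critical"  # under 5%: results are unlikely to reflect the customer base
    if rate_pct < 10:
        return "low"       # under 10%: widely considered low in enterprise programs
    return "acceptable"


# Hypothetical campaign: 420 completed surveys from 12,000 delivered invitations
rate = response_rate(responses=420, invitations=12_000)
print(f"{rate:.1f}% -> {classify_rate(rate)}")
```

Tracking the rate per channel (email, SMS, in-app) rather than as one blended number makes it easier to see which delivery method is dragging participation down.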

Benchmarks vary by sector, but the downward trend is clear across B2C and B2B environments. As digital fatigue grows and customer attention fragments, traditional feedback collection methods continue to underperform.

This decline in participation doesn’t just reduce volume; it introduces a range of risks that can compromise how teams understand and act on customer feedback.

1.2 Why low response rates lead to CX blind spots

When fewer customers participate in surveys, visibility into their experiences narrows significantly. Teams may miss emerging issues, overlook friction points, or lose sight of evolving sentiment, especially across touchpoints that generate little to no direct feedback.

The problem isn’t just about scale; it’s about representativeness. Without enough input, teams struggle to see patterns with confidence. Key moments in the journey can go unnoticed, and signals from silent segments of the customer base remain hidden.

These feedback blind spots weaken the ability of CX and insights teams to stay responsive. In today’s real-time market, where customer expectations shift fast, even short-term gaps in understanding can widen into long-term CX challenges.

Enterprise teams solving this issue often adopt a modern CX analytics platform that unifies survey, behavioral, and unsolicited feedback to restore clarity and enable faster, more confident decision-making.

2. Risks of low survey response rates

When too few customers respond, it doesn’t just reduce your sample size—it undermines the structure of how your feedback system works. Insights lose their ability to guide priorities, validate assumptions, or support alignment across teams. Below are the distinct business risks that surface when your VoC program relies on incomplete, unbalanced, or inconsistent data.

2.1 Nonresponse bias – Strategic distortion from unbalanced feedback

Nonresponse bias occurs when surveys disproportionately capture feedback from highly vocal or emotionally charged customers, while the silent majority goes unheard. This skews the feedback loop, giving an outsized voice to edge cases that don’t reflect the actual experience across your customer base.

Key risks:

Roadmaps reflect extreme opinions, causing overcorrection on niche or edge-case issues

Silent majority feedback is excluded, making everyday friction invisible to CX teams

Customer personas become unreliable, as data overrepresents only reactive or high-sentiment users

Marketing and messaging are misaligned, driven by input from non-representative audiences
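Nonresponse bias is easy to underestimate, so here is a small Python illustration using entirely hypothetical segment sizes, response rates, and satisfaction scores. Each segment's weight in the survey results is its population share multiplied by its response rate, which is how vocal extremes can outweigh a larger but quieter group.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    name: str
    population_share: float  # fraction of the customer base (hypothetical)
    response_rate: float     # fraction of the segment that answers (hypothetical)
    mean_score: float        # true average satisfaction on a 0-10 scale (hypothetical)


def population_mean(segments: List[Segment]) -> float:
    """What you would see if every customer answered."""
    return sum(s.population_share * s.mean_score for s in segments)


def respondent_mean(segments: List[Segment]) -> float:
    """What the survey actually reports: segments weighted by who responds."""
    weights = [s.population_share * s.response_rate for s in segments]
    total = sum(weights)
    return sum(w * s.mean_score for w, s in zip(weights, segments)) / total


def respondent_share(segments: List[Segment], name: str) -> float:
    """Fraction of all responses that come from the named segment."""
    weights = {s.name: s.population_share * s.response_rate for s in segments}
    return weights[name] / sum(weights.values())


segments = [
    Segment("frustrated", 0.10, 0.20, 2.0),
    Segment("silent majority", 0.80, 0.03, 7.0),
    Segment("enthusiasts", 0.10, 0.15, 10.0),
]

print(f"true mean:   {population_mean(segments):.2f}")
print(f"survey mean: {respondent_mean(segments):.2f}")
print(f"silent majority: 80% of customers, "
      f"{respondent_share(segments, 'silent majority'):.0%} of responses")
```

With these made-up inputs, the silent majority makes up 80% of customers but only about 41% of responses, and the survey mean (about 6.07) understates the true mean (6.80). The size and direction of the gap depend entirely on your real segment mix.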

2.2 Reduced data quality – Weak foundation for analytics and segmentation

Low survey participation degrades the consistency and granularity of your feedback data. Without adequate volume across key segments, time periods, and channels, analytics become unstable and personalization efforts break down. What remains may look structured but lacks the depth and accuracy to support meaningful analysis.

Key risks:

Segment-level insights collapse, preventing journey-based improvements or localization

Statistical confidence in trends erodes, making long-term tracking unstable or misleading

Data can't be trusted for automation, disrupting personalization engines and next-best-action systems

Cross-functional teams rely on inconsistent inputs, leading to misalignment between product, CX, and analytics
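To see why statistical confidence erodes as responses shrink, here is a minimal Python sketch of the standard normal-approximation margin of error for a proportion (for example, the share of customers who report a delivery problem) at 95% confidence (z = 1.96). It assumes a simple random sample and measures only sampling error. It says nothing about nonresponse bias, which can make a result wrong even when the margin looks tight.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion p estimated from n responses.

    Uses the normal approximation z * sqrt(p * (1 - p) / n); z = 1.96 corresponds
    to 95% confidence. Assumes a simple random sample of respondents.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return z * math.sqrt(p * (1 - p) / n)


# p = 0.5 is the worst case: it gives the widest margin for a given n
for n in (1000, 400, 100, 50):
    print(f"n={n:>4}: ±{margin_of_error(0.5, n):.1%}")
```

At the worst-case proportion of 0.5, the margin is about ±3.1 points with 1,000 responses but roughly ±9.8 points with 100, wide enough that many period-over-period shifts in a segment fall inside the noise.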

2.3 Unreliable insights – False confidence leads to flawed execution

When insights are drawn from narrow or skewed feedback, they create a false sense of clarity. Teams feel data-driven, but the foundation is fragile. Execution suffers—not from a lack of action, but from misplaced action based on incomplete or misleading input.

Key risks:

CX teams act with certainty on incomplete signals, increasing the risk of customer dissatisfaction

Product decisions are delayed or reversed once downstream data contradicts earlier conclusions

Retention strategies underperform, as early churn indicators are absent or buried

Executive reporting loses credibility, making it harder to secure support for more accurate voice-of-customer insights

What can leaders do about these risks?

The answer isn’t just better surveys; it’s better listening. Forward-thinking brands are rebuilding their CX engines around diverse, always-on feedback, not just form-based input. When customer surveys are supported by unsolicited signals, behavioral data, and contextual inputs, the quality of insight rises dramatically.

How survey-only and multi-source feedback compare by industry:

| Industry | Survey-only feedback | Expanded feedback (multi-source) |
|---|---|---|
| Retail & CPG | Fragmented view, limited context | 🔼 2.5x engagement, 🔼 90% retention visibility |
| Banking | Missed emotional context | 🔼 15% higher retention with integrated feedback |
| SaaS / Tech | Delayed sentiment detection | 🔼 20–30% CSAT lift, 🔼 25% cross-sell conversions |
| Apparel / D2C | Over-reliance on post-purchase NPS | 🔼 3x faster response to product friction |

Industry benchmarks: Gartner, McKinsey, Gallup, Deloitte, etc.

💡 The takeaway: expanding your VoC inputs directly addresses the blind spots, biases, and execution gaps caused by low survey response rates.

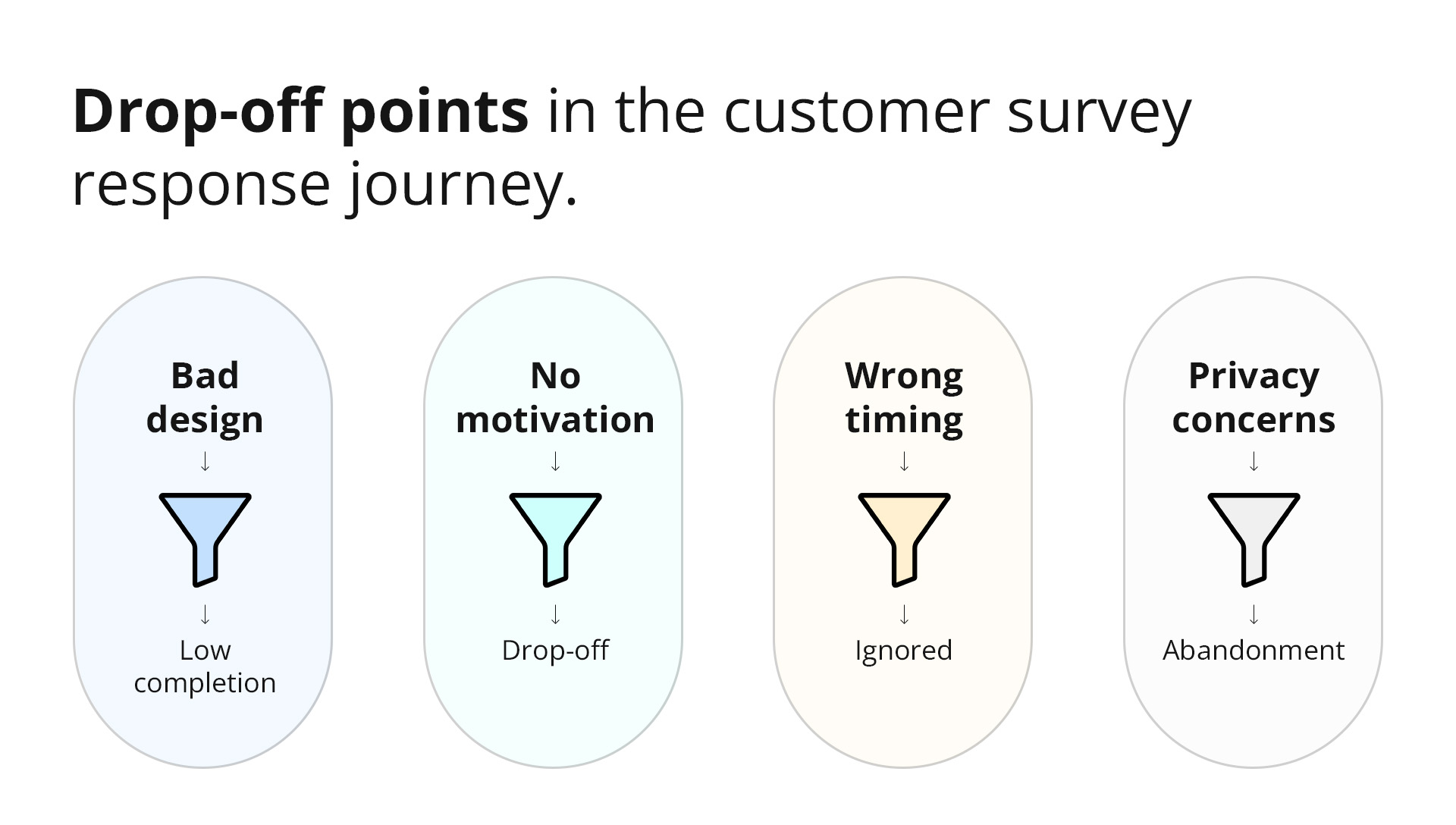

3. What are the 4 common causes of low response rates?

Low survey participation is rarely due to one factor. It’s the result of missteps across design, timing, motivation, and trust, each of which erodes a customer's willingness to respond. For CX and insights leaders, addressing these issues requires a sharper understanding of what customers experience before they even choose to give feedback.

3.1 Survey design limitations – Structural flaws that discourage participation

Why it happens

When surveys are too long, overly structured, or misaligned with the customer journey, they create immediate resistance. Even loyal customers will abandon a feedback request if the format feels tedious or irrelevant.

What leaders often overlook

Survey design is frequently outsourced, standardized, or templated across regions and teams. But without adaptive logic, personalization, or platform optimization, even well-intentioned surveys feel generic and intrusive.

Signals of breakdown

Completion rates drop off sharply within the first few questions

Surveys aren’t optimized for mobile or multichannel use

Question order feels repetitive or irrelevant to the customer’s actual interaction

Multiple survey types (e.g., NPS + CSAT) are bundled together, diluting focus

3.2 Engagement and motivation factors – Low perceived value for the respondent

Why it happens

Customers often don’t respond simply because they don’t believe it matters. If brands don’t close the loop or personalize the experience, surveys become a one-way request for time, without benefit.

What leaders often overlook

Incentives and branding can’t fix a broken feedback contract. Customers need to feel their input leads to visible change or at least acknowledgement. Motivation comes from recognition, not reminders.

Signals of breakdown

Return respondents drop significantly over time

Customers mention “nothing changed” after previous feedback

Surveys feel transactional, with no thank-you or follow-up

The same users are surveyed repeatedly, leading to fatigue

3.3 Timing and delivery – Feedback requests sent at the wrong moment or channel

Why it happens

Even the best survey can fail if it’s delivered at the wrong time. Interrupting workflows or waiting too long after an interaction both lead to reduced recall, lower quality responses, or immediate dismissal.

What leaders often overlook

Timing is a CX signal. It should reflect the emotional moment of the customer, not the reporting cycle of the business. Email-only delivery ignores this entirely.

Signals of breakdown

Surveys arrive during high-friction steps (e.g., checkout, support escalation)

Feedback requests are delayed, making context hard to recall

No logic to trigger based on customer intent, emotion, or urgency

Customers ignore outreach channels that are overused or impersonal

3.4 Trust and privacy concerns – Perception of risk lowers participation

Why it happens

In today’s privacy-sensitive environment, anything that feels ambiguous, overly personal, or off-brand can signal risk. When customers aren’t confident in how their data will be used, they disengage, especially in sectors like finance, healthcare, and digital services.

What leaders often overlook

Security isn’t just about infrastructure—it’s also about perception. If branding, language, or sender identity doesn’t inspire trust, the survey may never even be opened.

Signals of breakdown

Surveys request unnecessary personal or behavioral details

The email sender's name or domain isn’t clearly tied to the brand

There’s no visible data use policy or privacy disclaimer

Customers abandon surveys mid-way after encountering sensitive questions

How AI-powered feedback analytics solves the 4 biggest drop-off causes

The root cause of low survey participation isn’t just poor design or bad timing; it’s the friction customers experience at every step. Whether it’s too many questions, impersonal outreach, inconvenient timing, or trust concerns, each layer of effort becomes a reason to ignore the survey.

AI-powered feedback analytics offers a smarter, frictionless alternative. Instead of asking customers to respond, it listens where they’re already talking, turning everyday interactions into structured, actionable insights.

Here’s how it addresses the biggest drop-off challenges:

📍 No more form fatigue

Rather than relying on long, rigid survey formats, AI analyzes open-ended feedback from real interactions, like support conversations, reviews, and chat logs, removing the need for structured forms entirely.

📍 Higher engagement without effort

Because customers aren’t asked to do anything extra, participation isn’t dependent on motivation. AI picks up authentic, unsolicited feedback from organic touchpoints, where customers are naturally expressive.

📍 Right-time signals, not delayed prompts

Instead of waiting for survey triggers, AI extracts feedback in the moment, detecting emotional cues and key topics as they happen. This eliminates the lag and drop-offs tied to poorly timed outreach.

📍 Built-in trust and low perceived risk

By avoiding direct data requests and respecting user privacy, AI-based feedback systems bypass the trust barriers that often cause customers to withhold input.

Clootrack combines these elements in a unified CX analytics engine, helping teams operationalize this shift by capturing unprompted feedback from dozens of sources without disrupting the customer journey.

For CX leaders, this means fewer drop-offs, deeper signals, and a smarter strategy, without adding survey pressure to already stretched customers.

4. How low survey response rates impact CX strategy and business performance

Most CX leaders track survey metrics, but few recognize how declining participation reshapes internal execution. When customer signals are delayed, incomplete, or inconsistent, it breaks the rhythm of how decisions get made. Without a strong feedback engine, teams slow down, alignment frays, and the ability to defend CX investment weakens.

These are not isolated issues; they compound over time, reducing the speed, cohesion, and impact of customer-centric strategies. Below are the most critical consequences of low survey participation at the organizational level.

4.1 Reduced CX decision velocity – Feedback delays break execution cycles

Why it matters

Timely decisions depend on fresh, reliable signals. When survey input lags or shrinks, teams lose the ability to respond quickly, test ideas, or validate friction points. The feedback loop slows, and so does the business.

Implications for leaders

Initiatives stall in pre-launch stages due to the lack of real-world validation

Sprint retros lose customer context, weakening agile responsiveness

Testing roadmaps drag out as signals trickle in instead of flowing in real time

Delayed detection of shifting sentiment erodes competitive timing

4.2 Fragmented customer understanding – Cross-functional clarity breaks down

Why it matters

When feedback lacks breadth or consistency, teams across the business develop siloed views of the customer. Product, marketing, CX, and analytics operate from misaligned assumptions, resulting in disconnected experiences and missed opportunities.

Implications for leaders

GTM, CX, and product leaders work off separate, uncoordinated customer narratives

Support and UX teams prioritize fixes that don’t ladder up to shared KPIs

Research duplication rises as each team re-asks the same customer questions

Executive decisions slow down as leaders debate “what the customer actually wants”

4.3 Weak CX ROI visibility – Low data volume weakens business cases

Why it matters

Without enough structured feedback, it becomes difficult to link CX actions to business outcomes. Leaders struggle to prove that customer initiatives are working, making it harder to defend budgets, drive roadmap changes, or influence company-wide priorities.

Implications for leaders

Feedback data doesn’t tie clearly to metrics like NPS lift, churn reduction, or LTV

OKRs and quarterly reviews lack insight-backed narratives

Budget negotiations favor teams with stronger performance attribution (e.g., sales, ops)

CX credibility erodes when leadership can’t connect initiatives to measurable wins

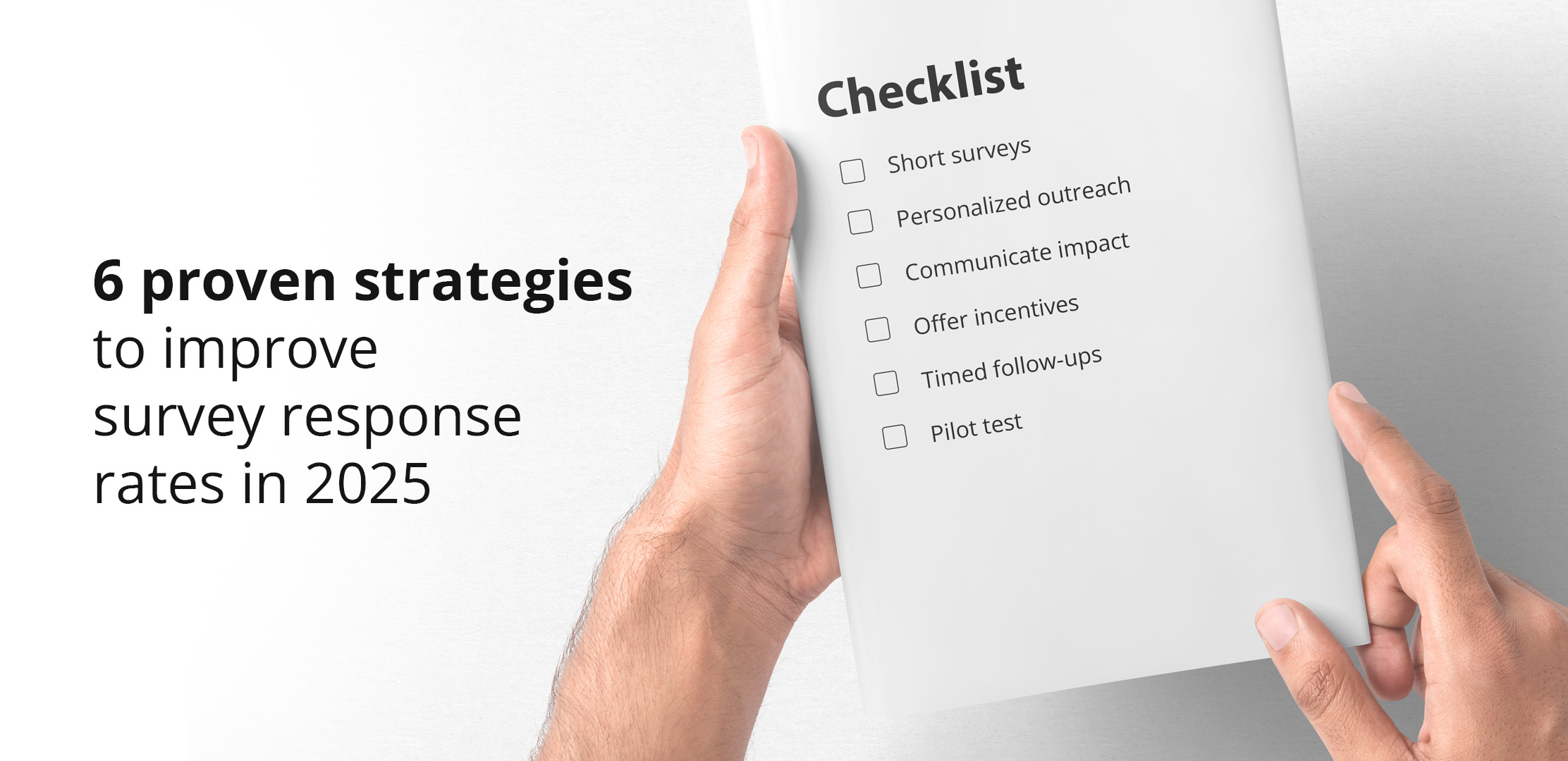

5. What are the 6 strategies to improve survey response rates?

CX teams can’t afford to treat low survey response as a static metric. It’s a solvable problem rooted in how your customer feedback analytics system is designed, delivered, and optimized for engagement.

Below are six proven tactics that help leaders rebuild participation, restore feedback visibility, and regain confidence in customer-led decisions.

5.1 Optimize survey design

Surveys that are too long or poorly structured turn customers away. Focus on short, clear, and relevant questions that reflect the customer's experience. Use skip logic to avoid asking unnecessary questions and ensure the design is easy to complete on mobile. Small changes in format can lead to major gains in completion rates.
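Skip logic can be as simple as attaching a condition to each question. The Python sketch below is a hypothetical, tool-agnostic model: the `Question` class, the `show_if` predicate, and the sample questions are illustrative assumptions, not any survey platform's API. A question is shown only if its condition holds for the answers given so far, so respondents never see follow-ups that don't apply to them.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, str]


@dataclass
class Question:
    qid: str
    text: str
    # Show this question only when the predicate on earlier answers is true
    show_if: Optional[Callable[[Answers], bool]] = None


def next_question(questions: List[Question], answers: Answers) -> Optional[Question]:
    """Return the first unanswered question whose condition is met, or None when done."""
    for q in questions:
        if q.qid in answers:
            continue
        if q.show_if is None or q.show_if(answers):
            return q
    return None


def run(questions: List[Question], respond: Callable[[Question], str]) -> Answers:
    """Walk the survey in order, asking only the questions that apply."""
    answers: Answers = {}
    while (q := next_question(questions, answers)) is not None:
        answers[q.qid] = respond(q)
    return answers


survey = [
    Question("used_support", "Did you contact support about this order? (yes/no)"),
    Question("support_csat", "How satisfied were you with support? (1-5)",
             show_if=lambda a: a.get("used_support") == "yes"),
    Question("delivery_ok", "Did your order arrive on time? (yes/no)"),
    Question("delivery_issue", "What went wrong with the delivery?",
             show_if=lambda a: a.get("delivery_ok") == "no"),
]

# Simulated respondent who skipped support and got an on-time delivery
scripted = {"used_support": "no", "delivery_ok": "yes"}
print(run(survey, lambda q: scripted[q.qid]))
```

In this example, a respondent who answers "no" to the support question and "yes" to on-time delivery sees two questions instead of four.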

5.2 Personalize survey outreach

Generic survey requests often get ignored. Personalizing the subject line, sender name, and message based on the customer’s recent activity helps the request feel more relevant. For example, referencing a recent purchase or support interaction can lift response rates significantly without needing complex tools.

5.3 Communicate purpose and impact

Customers are more likely to respond when they understand why the survey matters. Let them know how customer feedback will be used and what CX improvements it could lead to. Showing past improvements made from similar input helps build trust and makes the request feel more worthwhile.

5.4 Offer appropriate incentives

The right incentive shows appreciation and increases participation. This doesn’t have to be a big offer; discount codes, giveaways, or early access work well. The key is to offer something simple and relevant that gives customers a reason to take action without influencing the honesty of their responses.

5.5 Use well-timed follow-ups

Sending a reminder 2–3 days after the initial survey can lift response rates by up to 30%, according to industry benchmarks, especially when the first request was missed or ignored. The key is to space follow-ups strategically, keep them short and respectful, and clearly state how long the survey takes. Poorly timed or excessive nudges can frustrate customers and hurt brand perception.
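The follow-up rule can be expressed as a simple eligibility check. This Python sketch is hypothetical: the `Invite` record, the 2-day delay, and the one-reminder cap are assumptions chosen to match the guidance here, not settings from any particular email or survey platform. It nudges only customers who have not responded and have not already reached the reminder cap.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

REMINDER_DELAY = timedelta(days=2)  # assumption: first nudge after 2 days (guide suggests 2–3)
MAX_REMINDERS = 1                   # assumption: cap nudges to avoid survey fatigue


@dataclass
class Invite:
    customer_id: str
    sent_at: datetime
    responded: bool = False
    reminders_sent: int = 0
    last_contact: Optional[datetime] = None  # time of the most recent reminder, if any


def due_for_reminder(invites: List[Invite], now: datetime) -> List[str]:
    """Return IDs of customers who should receive a reminder at time `now`."""
    due = []
    for inv in invites:
        if inv.responded or inv.reminders_sent >= MAX_REMINDERS:
            continue  # never nudge people who answered or already hit the cap
        last = inv.last_contact or inv.sent_at
        if now - last >= REMINDER_DELAY:  # space nudges from the latest contact
            due.append(inv.customer_id)
    return due


now = datetime(2025, 3, 10, 9, 0)
invites = [
    Invite("c1", datetime(2025, 3, 7, 9, 0)),                    # 3 days, no reply
    Invite("c2", datetime(2025, 3, 7, 9, 0), responded=True),    # already answered
    Invite("c3", datetime(2025, 3, 9, 9, 0)),                    # only 1 day old
    Invite("c4", datetime(2025, 3, 5, 9, 0), reminders_sent=1),  # already reminded
]
print(due_for_reminder(invites, now))
```

Only `c1` qualifies here. Raising `MAX_REMINDERS` above 1 would use `last_contact` to keep later nudges spaced out, but each extra reminder increases the risk of frustrating customers.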

5.6 Pilot test the survey

Testing with a small group before launch helps spot weak points in design, language, or delivery of the survey. Leaders can identify where drop-offs occur and improve the flow before scaling. This step ensures the final version collects higher-quality customer feedback and avoids preventable misfires.

6. Beyond surveys: alternative methods for capturing customer feedback

Surveys alone can no longer support the pace or complexity of today’s CX landscape. Customers interact with brands across dozens of digital and physical touchpoints, often leaving feedback without being prompted. To stay ahead, CX and insights leaders must expand their toolkit, moving from isolated survey campaigns to an always-on feedback infrastructure that reflects real customer behavior, at scale.

6.1 The limitations of survey-only approaches

Surveys miss what customers don’t explicitly say. They depend on participation, introduce self-selection bias, and often fail to capture emotion, urgency, or context. Worse, many surveys ask predefined questions that frame the response, leaving little room for unexpected insights. This results in data that’s narrow, reactive, and slow to surface emerging issues.

6.2 Tapping into unsolicited feedback

Unsolicited feedback, found in support chats, social media, app reviews, and online forums, is often more candid and emotionally rich than survey data. It reflects customer pain points and delight in the moment, without the filters of question structure or timing. By listening to what customers share on their own terms, brands gain unprompted insight into loyalty drivers, churn signals, and unmet needs.

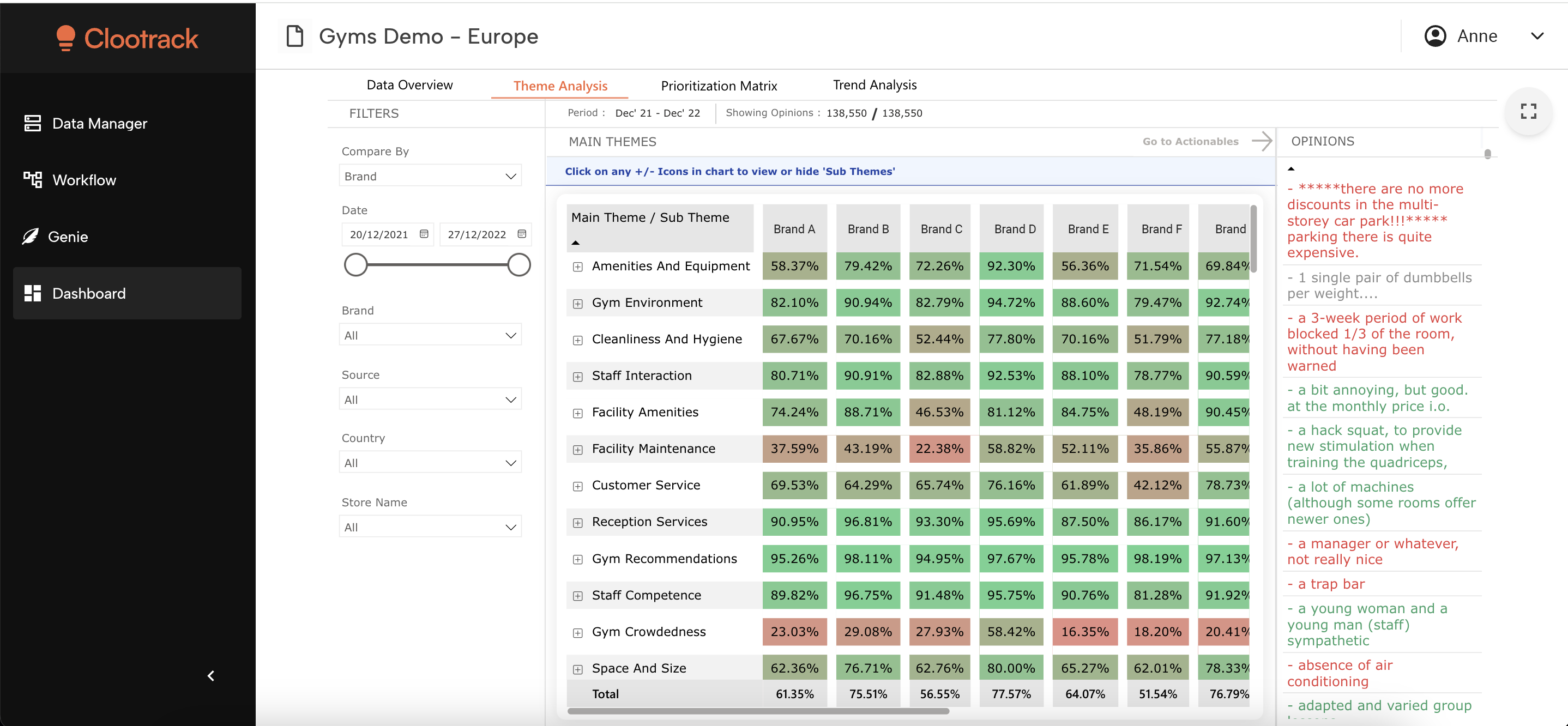

6.3 Leveraging AI for unstructured feedback analysis

Manually analyzing open-ended feedback at scale is impractical; AI makes it feasible, fast, and accurate. With unsupervised NLP models, teams can detect themes, sentiment shifts, and critical topics across thousands of data points in real time. Modern AI systems can also filter noise, link feedback to specific touchpoints, and surface trends missed by traditional tools. For CX leaders, this means faster insight cycles, deeper visibility, and decisions grounded in the full voice of the customer.

Clootrack goes further by delivering actionable VoC insights from diverse data sources, in over 55 languages, with 98% sentiment and 96.5% theme detection accuracy. That means zero tagging, zero guesswork, and always-on insights ready to drive confident CX action.

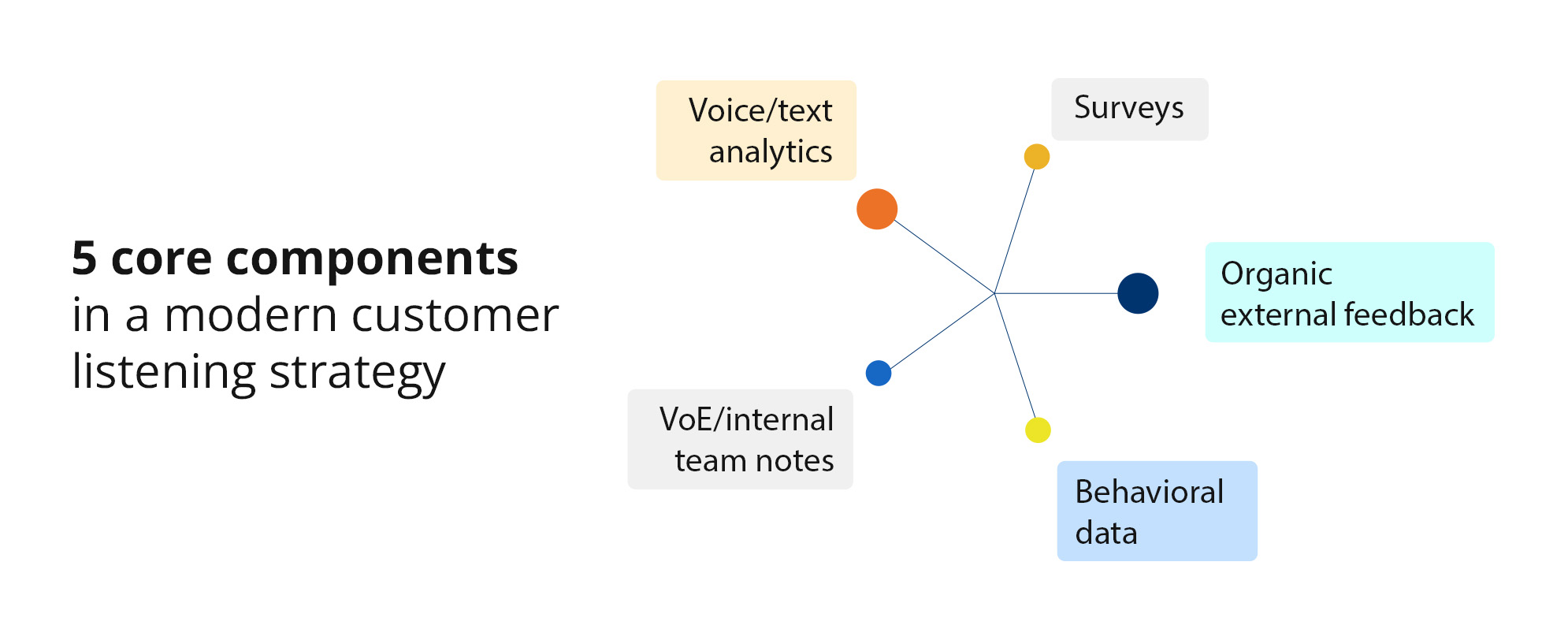

7. A multi-channel listening framework for CX leaders

As survey participation declines, CX leaders need a broader customer feedback strategy—one that captures the full spectrum of signals: solicited and unsolicited, structured and unstructured, direct and indirect.

A listening framework built on AI-powered voice-of-customer analytics tools shifts CX teams from reactive feedback collection to proactive signal detection. It strengthens decisions by integrating diverse sources into a unified, real-time view of customer sentiment, behavior, and experience.

Core pillars of a high-performing listening framework:

🔹 Structured feedback collection

Surveys, reviews, and in-app forms still play a role, but they should validate, not dominate. These tools help confirm patterns found in unsolicited feedback and support baseline metrics like NPS or CSAT.

🔹 External organic feedback

Pull insights from social media, app reviews, forums, and support chats. These channels surface raw, real-time sentiment that customers won’t share through structured forms. They’re also the first indicators of churn risk or product friction.

🔹 Operational and behavioral data

Signals like feature usage, repeat visits, or churn events reveal how customers behave, not just what they say. This data fills in gaps left by surveys and helps teams act on intent, not just opinion.

🔹 Voice and text analytics

An AI-powered customer intelligence platform analyzes call transcripts, chats, and open-text feedback to uncover sentiment, urgency, and emerging issues. This turns messy qualitative data into scalable, decision-ready insight.

🔹 Internal team inputs

Frontline teams observe emerging issues before they appear in data. Incorporate voice-of-employee (VoE) and agent notes to surface contextual insights from those closest to the customer.

The most resilient CX strategies don’t rely on one feedback source. They build a listening infrastructure that’s always on, always learning, and deeply contextual.

8. Strategic takeaways for solving the low survey response crisis

Low survey response rates are no longer just a data quality issue—they’re a strategic obstacle to delivering customer-led growth.

When leaders rely on limited participation to guide decisions, they invite blind spots, misalignment, and execution delays. And in today’s real-time CX landscape, those gaps compound quickly. The brands that pull ahead are those that treat this problem not as a measurement glitch but as a signal to evolve.

That evolution starts with a mindset. High-performing teams stop optimizing surveys in isolation and start redesigning their entire listening ecosystem. They blend solicited with unsolicited input, structured with behavioral data, and human intuition with AI-powered interpretation. They also reframe feedback as a live, continuous flow—one that should meet customers where they are, not where dashboards expect them to be.

Leaders who act decisively on this front don’t just increase response rates. They transform how their organization understands and serves customers. And in a market defined by expectations, trust, and immediacy, that transformation is what turns insight into impact.

For enterprises ready to make that leap, Clootrack offers a faster path forward, unifying structured and unstructured signals across channels to unlock deeper CX clarity.

Learn how -> Request a personalized demo!

Frequently asked questions (FAQs)

What is considered a low survey response rate in CX programs?

In customer experience programs, any survey response rate below 10% is widely considered low, and if it falls under 5%, it's a critical red flag. At this level, the feedback is unlikely to reflect the broader customer base, and results can become statistically unreliable. Low rates increase the risk of bias, especially if only extreme or highly engaged customers are responding. For enterprise teams, this is a clear signal to revisit your feedback collection methods and expand listening channels.

Can I still use survey data if response rates are low?

Yes, but only with caution. Low-response data can reveal surface-level patterns, but it should never be treated as a standalone source of truth. CX leaders should treat these insights as directional, not definitive. Always validate through alternative data streams such as customer support interactions, product usage analytics, or social listening. The goal is to triangulate insights and reduce the risk of making decisions based on incomplete or skewed data.

Why do B2C/D2C response rates tend to be lower than B2B?

B2C survey response rates often range from 5% to 15%, while B2B rates can reach 23% to 30% or more, depending on industry and relationship strength. This gap is due to a few key factors:

B2C customers typically have less invested relationships with brands.

There’s lower perceived value in participating.

Privacy and data fatigue discourage engagement.

In contrast, B2B stakeholders often view surveys as part of a strategic partnership and are more inclined to provide feedback, especially when the feedback loop is closed and visible improvements are made.

Do reminder emails really increase survey response rates?

Yes, significantly. Sending a follow-up reminder 2–3 days after the initial request can boost response rates by up to 30%, according to industry benchmarks. Reminders are especially effective when the first message was missed or ignored, not when customers actively chose not to respond. Keep reminders short, clearly state the time required to complete the survey, and avoid sounding pushy. Even a small uptick in responses from reminders can meaningfully improve data quality.

How long should CX surveys be to maximize participation?

Surveys should be under 5 minutes, ideally with no more than 8–10 well-crafted questions. Participation drops sharply in longer formats, especially on mobile. CX leaders should prioritize quality over quantity: focus on a few impactful questions, use logic to skip irrelevant ones, and test the format before going live. Remember, customers are more likely to respond when the survey feels fast, relevant, and respectful of their time.
